Spoken Content Retrieval - Lattices and Beyond

Lin-Shan Lee (National Taiwan University)

Multimedia content on the Internet is very attractive, and the spoken part of such content very often carries its core information. It is therefore possible to index, retrieve or browse multimedia content primarily based on its spoken part. If the spoken content could be transcribed into text with very high accuracy, the problem would naturally reduce to text information retrieval. However, the inevitably high recognition error rates for spontaneous speech, including out-of-vocabulary (OOV) words, under a wide variety of acoustic conditions and linguistic contexts make this impossible. One primary approach, among many others, is to search over lattices that contain multiple recognition hypotheses, so that more of the correct recognition results are included. This talk will briefly review the approaches and directions along this line, covering not only search over lattices but also approaches beyond it, such as relevance feedback, learning approaches, key term extraction, semantic retrieval and semantic structuring of spoken content.
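To make the lattice idea concrete, here is a minimal sketch. It assumes a toy word lattice stored as a list of arcs `(from_node, to_node, word, probability)`, with nodes numbered in topological order (node 0 is the start, the last node is the end). The function names `arc_posteriors` and `expected_term_frequency` and the example lattice are hypothetical illustrations, not taken from the talk. A forward-backward pass gives each arc's posterior probability. Summing the posteriors of arcs labeled with a query term gives the term's expected count in the utterance, which can serve as a simple relevance score.

```python
# Hypothetical arc format: (from_node, to_node, word, probability).
# Nodes are assumed to be numbered in topological order.
Arc = tuple[int, int, str, float]


def arc_posteriors(arcs: list[Arc], num_nodes: int) -> list[float]:
    """Forward-backward over a DAG lattice; returns one posterior per arc."""
    alpha = [0.0] * num_nodes  # total probability of paths from start to node
    beta = [0.0] * num_nodes   # total probability of paths from node to end
    alpha[0] = 1.0
    beta[num_nodes - 1] = 1.0
    # Forward pass: sorting by source node guarantees alpha[src] is complete.
    for src, dst, _, p in sorted(arcs, key=lambda a: a[0]):
        alpha[dst] += alpha[src] * p
    # Backward pass: sorting by destination (descending) guarantees beta[dst] is complete.
    for src, dst, _, p in sorted(arcs, key=lambda a: a[1], reverse=True):
        beta[src] += p * beta[dst]
    total = alpha[num_nodes - 1]  # probability mass of all complete paths
    return [alpha[s] * p * beta[d] / total for s, d, _, p in arcs]


def expected_term_frequency(arcs: list[Arc], num_nodes: int, term: str) -> float:
    """Expected count of `term` in the utterance, summed over all lattice arcs."""
    posts = arc_posteriors(arcs, num_nodes)
    return sum(post for (_, _, word, _), post in zip(arcs, posts) if word == term)


# Toy lattice (hypothetical probabilities) with two competing words per slot.
LATTICE: list[Arc] = [
    (0, 1, "beach", 0.6),
    (0, 1, "speech", 0.4),
    (1, 2, "retrieval", 0.9),
    (1, 2, "removal", 0.1),
]

print(expected_term_frequency(LATTICE, 3, "speech"))
```

In this toy lattice the 1-best path is "beach retrieval", so a text search over the 1-best transcript finds no match for "speech", while the lattice still assigns it an expected count of about 0.4.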

Outline

0:00:43

Intro

0:02:00

Spoken Content Retrieval

0:05:55

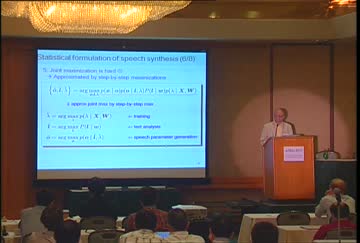

Fundamentals

0:15:01

Recent Research Examples (1) - Integration and Weighting

0:19:16

Recent Research Examples (2) - Acoustic Modeling

0:22:53

Recent Research Examples (3) - Acoustic Features and Pseudo Relevance Feedback

0:26:25

Recent Research Examples (4) - Improved Pseudo Relevance Feedback

0:33:06

Recent Research Examples (5) - Concept Matching

0:35:40

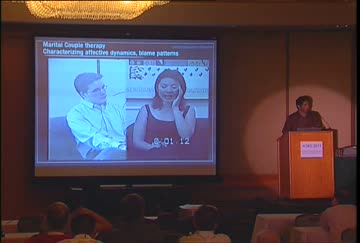

Recent Research Examples (6) - User-content Interaction

0:44:38

Link to the demo system

0:52:55

Conclusion