A Maximum Likelihood Approach to SNR-Progressive Learning Using Generalized Gaussian Distribution for LSTM-Based Speech Enhancement

(3-minute introduction)

| Xiao-Qi Zhang (USTC, China), Jun Du (USTC, China), Li Chai (USTC, China), Chin-Hui Lee (Georgia Tech, USA) |
|---|

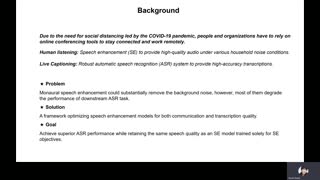

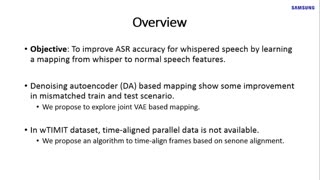

A maximum likelihood (ML) approach to characterizing regression errors in each target layer of SNR-progressive learning (PL) using long short-term memory (LSTM) networks is proposed to improve speech enhancement performance at low SNR levels. Each LSTM layer is guided to learn an intermediate target with a specific SNR gain. In contrast to the previously proposed minimum mean squared error criterion (MMSE-PL-LSTM), which leads to an uneven distribution and a broad dynamic range of the prediction errors, we model the errors with a generalized Gaussian distribution (GGD) at all intermediate layers in the newly proposed ML-PL-LSTM framework. The shape factors of the GGD can be updated automatically while the LSTM networks are trained in a layer-wise manner to estimate the network parameters progressively. Tested on the CHiME-4 simulation set for speech enhancement in unseen noise conditions, the proposed ML-PL-LSTM approach outperforms MMSE-PL-LSTM in terms of both PESQ and STOI measures. Furthermore, when evaluated on the CHiME-4 real test set for speech recognition, ML-enhanced speech also yields lower word error rates than MMSE-enhanced speech.
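To make the contrast between the MMSE and ML criteria concrete, the following is a minimal sketch of a GGD negative log-likelihood on regression errors. It assumes the standard zero-mean GGD density p(e) = β / (2αΓ(1/β)) · exp(−(|e|/α)^β), with scale α and shape factor β. The abstract does not give the paper's exact parameterization or its shape-factor update rule, so the function names (`ggd_nll`, `ml_scale`, `best_shape`) and the grid search over β are illustrative assumptions, not the authors' method.

```python
import math


def ggd_nll(errors, alpha, beta):
    """Mean negative log-likelihood of errors under a zero-mean GGD.

    alpha is the scale and beta the shape factor (both must be positive).
    beta = 2 gives a Gaussian, beta = 1 a Laplacian.
    """
    if alpha <= 0 or beta <= 0:
        raise ValueError("alpha and beta must be positive")
    # -log of the normalizer beta / (2 * alpha * Gamma(1 / beta))
    log_norm = math.log(2.0 * alpha) + math.lgamma(1.0 / beta) - math.log(beta)
    mean_pow = sum((abs(e) / alpha) ** beta for e in errors) / len(errors)
    return log_norm + mean_pow


def ml_scale(errors, beta):
    """Closed-form ML estimate of alpha for a fixed beta.

    Setting d(NLL)/d(alpha) = 0 gives alpha**beta = beta * mean(|e|**beta).
    Returns 0.0 if every error is zero, which ggd_nll rejects.
    """
    mean_pow = sum(abs(e) ** beta for e in errors) / len(errors)
    return (beta * mean_pow) ** (1.0 / beta)


def best_shape(errors, candidates):
    """Hypothetical shape update: pick the candidate beta with the lowest NLL."""
    return min(candidates, key=lambda b: ggd_nll(errors, ml_scale(errors, b), b))


if __name__ == "__main__":
    errors = [0.5, -1.0, 0.25, 2.0, -0.1]
    for beta in (1.0, 1.5, 2.0):
        alpha = ml_scale(errors, beta)
        print(beta, alpha, ggd_nll(errors, alpha, beta))
    print("selected beta:", best_shape(errors, (1.0, 1.5, 2.0)))
```

With β fixed at 2 and α at its ML estimate, the objective reduces to the Gaussian negative log-likelihood, so minimizing it with respect to the network outputs is equivalent to minimizing the squared error. Letting β vary is what allows the error model to adapt to heavier-tailed or more peaked error distributions.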