Contextualized Attention-based Knowledge Transfer for Spoken Conversational Question Answering

(3-minute introduction)

| Chenyu You (Yale University, USA), Nuo Chen (Peking University, China), Yuexian Zou (Peking University, China) |
|---|

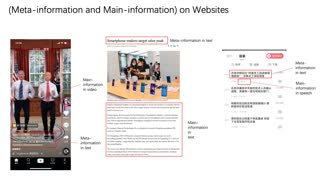

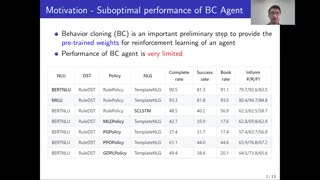

Spoken conversational question answering (SCQA) requires machines to model the flow of multi-turn conversation given speech utterances and text corpora. Unlike traditional text question answering (QA) tasks, SCQA involves audio signal processing, passage comprehension, and contextual understanding. However, automatic speech recognition (ASR) systems introduce unexpected noise into the transcriptions, which degrades SCQA performance. To overcome this problem, we propose CADNet, a novel contextualized attention-based distillation approach that applies both cross-attention and self-attention to obtain ASR-robust contextualized embedding representations of the passage and dialogue history. We also introduce a conventional knowledge distillation framework for the spoken setting, which distills ASR-robust knowledge from the estimated probabilities of the teacher model to the student. We conduct extensive experiments on the Spoken-CoQA dataset and demonstrate that our approach achieves strong performance on this task.
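The distillation step, in which the student learns from the teacher's estimated probabilities, can be illustrated with a minimal sketch of a conventional (Hinton-style) distillation loss. This is not the CADNet implementation: the function names, the `temperature` and `alpha` parameters, and the toy logits over four candidate answer positions are all assumptions made for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities, optionally softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) between two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def cross_entropy(probs, gold_index, eps=1e-12):
    """Negative log-likelihood of the gold label."""
    return -math.log(probs[gold_index] + eps)


def distillation_loss(student_logits, teacher_logits, gold_index,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-label term and a hard-label term.

    temperature and alpha are hypothetical hyperparameters, not values
    taken from the paper.
    """
    soft_teacher = softmax(teacher_logits, temperature)
    soft_student = softmax(student_logits, temperature)
    # Soft term: pull the student's distribution toward the teacher's.
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    soft_term = kl_divergence(soft_teacher, soft_student) * temperature ** 2
    # Hard term: ordinary supervised loss on the gold answer.
    hard_term = cross_entropy(softmax(student_logits), gold_index)
    return alpha * soft_term + (1.0 - alpha) * hard_term


# Toy logits over four candidate answer positions (made-up numbers).
teacher = [0.2, 3.1, 0.4, -1.0]
student = [0.5, 1.2, 0.9, -0.3]
loss = distillation_loss(student, teacher, gold_index=1)
print(f"distillation loss: {loss:.4f}")
```

With `alpha=1.0` the student is trained only to match the teacher's softened probabilities, and with `alpha=0.0` the loss reduces to standard supervised training on the gold answer.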