IMPROVED SPEECH SEPARATION WITH TIME-AND-FREQUENCY CROSS-DOMAIN FEATURE SELECTION

(3 minutes introduction)

| Tian Lan (UESTC, China), Yuxin Qian (UESTC, China), Yilan Lyu (UESTC, China), Refuoe Mokhosi (UESTC, China), Wenxin Tai (UESTC, China), Qiao Liu (UESTC, China) |

|---|

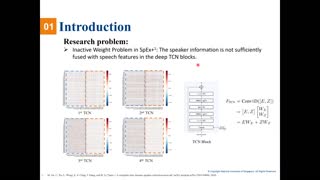

Most deep learning-based monaural speech separation models only use either spectrograms or time domain speech signal as the input feature. The recently proposed cross-domain network (CDNet) demonstrates that concatenated frequency domain and time domain features helps to reach better performance. Although concatenation is a widely used feature fusion method, it has been proved that using frequency domain and time domain features to reconstruct signal makes minor difference compared with only using time domain feature in CDNet. To make better use of frequency domain feature in decoder, we propose using selection weights to select and fuse features from different domains and unify the features used in separator and decoder. In this paper, we propose using trainable weights or the global information calculated from the different domain features to generate selection weights. Given that our proposed models use element-wise fusing in the encoder, only one deconvolution layer in the decoder is needed to reconstruct signals. Experiments show that proposed methods achieve encouraging results on the large and challenging Libri2Mix dataset with a small increasing in parameters, which proves the frequency domain information is beneficial for signal reconstruction. Furthermore, proposed method has shown good generalizability on the unmatched VCTK2Mix dataset.