ISCA Medalist: Forty years of speech and language processing: from Bayes decision rule to deep learning

Hermann Ney (RWTH Aachen University)

Abstract

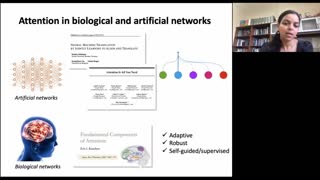

When research on automatic speech recognition started, the statistical (or data-driven) approach was associated with methods like Bayes decision rule, hidden Markov models, Gaussian models and the expectation-maximization algorithm. Later extensions included discriminative training and hybrid hidden Markov models using multi-layer perceptrons and recurrent neural networks. Some of the methods originally developed for speech recognition turned out to be seminal for other language processing tasks like machine translation, handwritten character recognition and sign language processing. Today's research on speech and language processing is dominated by deep learning, which is typically identified with methods like attention modelling, sequence-to-sequence processing and end-to-end processing.

In this talk, I will present my personal view of the historical developments of research on speech and language processing. I will put particular emphasis on the framework of Bayes decision rule and on the question of how the various approaches developed fit into this framework.

Bio

Hermann Ney is a professor of computer science at RWTH Aachen University, Germany. His main research interests lie in the area of statistical classification, machine learning and neural networks with specific applications to speech recognition, handwriting recognition, machine translation and other tasks in natural language processing.

He and his team participated in a large number of large-scale joint projects like the German project VERBMOBIL, the European projects TC-STAR, QUAERO, TRANSLECTURES, EU-BRIDGE and the US-American projects GALE, BOLT, BABEL. His work has resulted in more than 700 conference and journal papers with an h-index of 100+ and 60000+ citations (based on Google Scholar). More than 50 of his former PhD students work for IT companies on speech and language technology.

The results of his research contributed to various operational research prototypes and commercial systems. In 1993 Philips Dictation Systems Vienna introduced a large-vocabulary continuous-speech recognition product for medical applications. In 1997 Philips Dialogue Systems Aachen introduced a spoken dialogue system for train timetable information via the telephone. In VERBMOBIL, his team introduced the phrase-based approach to data-driven machine translation, which in 2008 was used by his former PhD students at Google as the starting point for the service Google Translate. In TC-STAR, his team built the first research prototype system for spoken language translation of real-life domains.

Awards: 2005 Technical Achievement Award of the IEEE Signal Processing Society; 2013 Award of Honour of the International Association for Machine Translation; 2019 IEEE James L. Flanagan Speech and Audio Processing Award; 2021 ISCA Medal for Scientific Achievements.