Metric Learning Based Feature Representation With Gated Fusion Model For Speech Emotion Recognition

(3-minute introduction)

| Yuan Gao (Tianjin University, China), Jiaxing Liu (Tianjin University, China), Longbiao Wang (Tianjin University, China), Jianwu Dang (Tianjin University, China) |
|---|

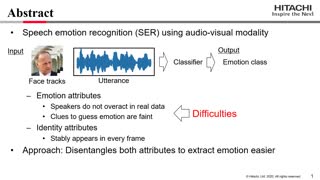

Because emotional speech data is scarce, existing speech emotion recognition (SER) approaches achieve relatively low recognition performance, which must improve to meet the needs of real-life applications. An increasingly popular remedy for data scarcity is to transfer emotional information through pre-trained models and extract additional features. However, the resulting feature representation needs further compression: the training objective of unsupervised learning is to reconstruct the input, so the latent representation also contains non-affective information. In this paper, we introduce deep metric learning to constrain the feature distribution of the pre-trained model. Specifically, we propose a triplet loss that recasts the representation extraction model as a pseudo-siamese network and achieves more efficient knowledge transfer for emotion recognition. Furthermore, we propose a gated fusion method to learn the connection between the features extracted by the pre-trained model and those extracted by the supervised feature extraction model. We conduct experiments on the common benchmark dataset IEMOCAP to verify the performance of the proposed model. The experimental results demonstrate the advantages of our model, which outperforms the unsupervised transfer learning system by 3.7% and 3.88% in weighted accuracy and unweighted accuracy, respectively.
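The abstract gives no formulas for the triplet loss or the gated fusion, so the following is only a minimal sketch of the two generic techniques, not the authors' implementation. It assumes a standard margin-based triplet loss on squared Euclidean distances and an element-wise sigmoid gate that mixes two feature vectors of equal length. The function names, the `margin` value, and the per-dimension gate weights (`w_pre`, `w_sup`, `bias`) are all hypothetical.

```python
import math


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Generic margin-based triplet loss (assumed form, not from the paper).

    Pulls the anchor toward a same-emotion sample (positive) and pushes it
    away from a different-emotion sample (negative) by at least `margin`.
    """
    d_pos = sum((a - p) ** 2 for a, p in zip(anchor, positive))
    d_neg = sum((a - n) ** 2 for a, n in zip(anchor, negative))
    return max(0.0, d_pos - d_neg + margin)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(h_pre, h_sup, w_pre, w_sup, bias):
    """Element-wise gated fusion of two feature vectors (assumed form).

    h_pre: features from the pre-trained (transfer) branch
    h_sup: features from the supervised branch
    The gate g in (0, 1) decides, per dimension, how much of each branch
    to keep: fused = g * h_pre + (1 - g) * h_sup.
    Per-dimension scalar weights are a simplification; a real model would
    typically compute the gate with learned weight matrices.
    """
    fused = []
    for hp, hs, wp, ws, b in zip(h_pre, h_sup, w_pre, w_sup, bias):
        g = sigmoid(wp * hp + ws * hs + b)
        fused.append(g * hp + (1.0 - g) * hs)
    return fused


if __name__ == "__main__":
    # A negative far from the anchor already satisfies the margin: loss is 0.
    print(triplet_loss([0.0, 0.0], [0.0, 0.0], [3.0, 4.0]))
    # With zero gate weights the gate is 0.5, so the branches are averaged.
    print(gated_fusion([1.0, 3.0], [3.0, 5.0], [0.0, 0.0], [0.0, 0.0], [0.0, 0.0]))
```

In this sketch the triplet loss shapes the embedding space so that emotion classes separate, while the gate lets the model weight the transferred and supervised features per dimension instead of simply concatenating them.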