Unsupervised Training of a DNN-based Formant Tracker

| Jason Lilley (Nemours, USA), H. Timothy Bunnell (Nemours, USA) |
|---|

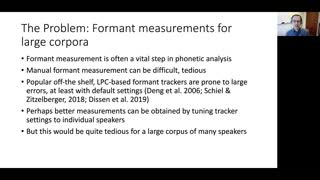

Phonetic analysis often requires reliable estimation of formants, but the estimates provided by popular programs can be unreliable. Recently, Dissen et al. [1] described DNN-based formant trackers that produced more accurate frequency estimates than several other trackers but that require manually corrected formant data for training. Here we describe a novel unsupervised training method for corpus-based DNN formant parameter estimation and tracking, with accuracy similar to that of [1]. Frame-wise spectral envelopes serve as the input. The outputs are estimates of the frequencies and bandwidths, plus amplitude adjustments, for a prespecified number of poles and zeros, hereafter referred to as “formant parameters.” A custom loss measure, based on the difference between the input envelope and one generated from the estimated formant parameters, is calculated and back-propagated through the network to establish the gradients with respect to the formant parameters. The approach is similar to that of autoencoders in that the model is trained to reproduce its input in order to discover latent features, which in this case are the formant parameters. Our results demonstrate that a reliable formant tracker can be constructed for a speech corpus without the need for hand-corrected training data.
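The reconstruction idea can be illustrated with a minimal sketch. It is not the loss used in this work: it assumes poles only (no zeros or amplitude adjustments), models each formant as a standard two-pole digital resonator normalized to 0 dB at 0 Hz, and uses a plain mean squared error in dB. The function names `resonator_db`, `envelope_from_formants`, and `envelope_loss` are hypothetical and chosen only for illustration.

```python
import numpy as np


def resonator_db(freqs_hz, center_hz, bandwidth_hz, sample_rate_hz):
    """Log-magnitude response (dB) of one two-pole resonator (assumed model)."""
    # Pole radius and angle from bandwidth and center frequency.
    r = np.exp(-np.pi * bandwidth_hz / sample_rate_hz)
    theta = 2.0 * np.pi * center_hz / sample_rate_hz
    # z^-1 evaluated on the unit circle at each analysis frequency.
    z_inv = np.exp(-1j * 2.0 * np.pi * np.asarray(freqs_hz) / sample_rate_hz)
    denom = 1.0 - 2.0 * r * np.cos(theta) * z_inv + (r ** 2) * z_inv ** 2
    # Numerator gain chosen so that the response is exactly 0 dB at 0 Hz.
    gain = 1.0 - 2.0 * r * np.cos(theta) + r ** 2
    return 20.0 * np.log10(np.abs(gain / denom))


def envelope_from_formants(freqs_hz, centers_hz, bandwidths_hz, sample_rate_hz):
    """Sum the dB responses of all resonators (a cascade in the linear domain)."""
    env = np.zeros(len(freqs_hz), dtype=float)
    for center, bandwidth in zip(centers_hz, bandwidths_hz):
        env += resonator_db(freqs_hz, center, bandwidth, sample_rate_hz)
    return env


def envelope_loss(input_env_db, centers_hz, bandwidths_hz, freqs_hz, sample_rate_hz):
    """Mean squared dB difference between input and regenerated envelopes."""
    estimate = envelope_from_formants(freqs_hz, centers_hz, bandwidths_hz, sample_rate_hz)
    return float(np.mean((np.asarray(input_env_db) - estimate) ** 2))


# Illustrative values only: a synthetic "input" envelope built from known formants.
sample_rate_hz = 16000.0
freqs_hz = np.linspace(0.0, 8000.0, 257)
target_env = envelope_from_formants(
    freqs_hz, [500.0, 1500.0, 2500.0], [80.0, 100.0, 120.0], sample_rate_hz
)
loss_exact = envelope_loss(
    target_env, [500.0, 1500.0, 2500.0], [80.0, 100.0, 120.0], freqs_hz, sample_rate_hz
)
loss_shifted = envelope_loss(
    target_env, [600.0, 1400.0, 2700.0], [80.0, 100.0, 120.0], freqs_hz, sample_rate_hz
)
```

In this sketch the loss is zero when the estimated parameters regenerate the input envelope and grows as the estimates move away from it. Training a network this way would additionally require the envelope computation to be written in an automatic differentiation framework so that gradients with respect to the formant parameters can be back-propagated; NumPy is used here only to keep the example short.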