Vision Transformer for Audio-based Primates Classification and COVID Detection

(Oral presentation)

| Steffen Illium (LMU München, Germany), Robert Müller (LMU München, Germany), Andreas Sedlmeier (LMU München, Germany), Claudia Linnhoff-Popien (LMU München, Germany) |
|---|

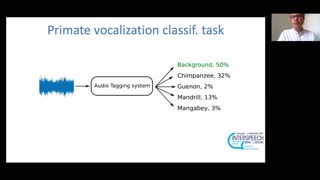

We apply the vision transformer, a deep learning model built around the attention mechanism, to mel-spectrogram representations of raw audio recordings. With mel-based data augmentation and sample weighting added, we achieve comparable performance on both ComParE21 tasks (the Primates Sub-Challenge, PRS, and the COVID-19 Cough Sub-Challenge, CCS), outperforming most single-model baselines. We further introduce overlapping vertical patching and evaluate the influence of different parameter configurations.
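The abstract does not define overlapping vertical patching in detail. The sketch below assumes that a "vertical" patch spans the full mel-frequency axis and a fixed number of consecutive time frames, and that overlap comes from a hop (stride) smaller than the patch width. Each patch is flattened into one token vector, which a vision transformer would then pass through its linear patch embedding. The names `vertical_patches`, `num_patches`, `patch_width`, and `stride` are hypothetical and are not taken from the authors' code.

```python
from typing import List

# Hypothetical layout: spec[m][t] is the energy in mel bin m at time frame t.
Spectrogram = List[List[float]]


def num_patches(n_frames: int, patch_width: int, stride: int) -> int:
    """Number of full vertical patches that fit along the time axis."""
    return (n_frames - patch_width) // stride + 1


def vertical_patches(spec: Spectrogram, patch_width: int, stride: int) -> List[List[float]]:
    """Cut a mel-spectrogram into (possibly overlapping) vertical patches.

    Assumption: each patch covers every mel bin and `patch_width` consecutive
    frames; consecutive patches start `stride` frames apart, so they overlap
    whenever stride < patch_width. Trailing frames that do not fill a whole
    patch are dropped (padding the input would be an alternative).
    """
    if patch_width <= 0 or stride <= 0:
        raise ValueError("patch_width and stride must be positive")
    n_mels = len(spec)
    n_frames = len(spec[0]) if n_mels else 0
    if n_frames < patch_width:
        raise ValueError("spectrogram is shorter than one patch")

    tokens = []
    for start in range(0, n_frames - patch_width + 1, stride):
        # Flatten frame by frame: all mel bins of frame `start`, then the next frame, ...
        token = [spec[m][t] for t in range(start, start + patch_width) for m in range(n_mels)]
        tokens.append(token)
    return tokens


if __name__ == "__main__":
    # Toy spectrogram: 4 mel bins x 10 frames, value = 10 * mel_bin + frame.
    spec = [[10 * m + t for t in range(10)] for m in range(4)]
    tokens = vertical_patches(spec, patch_width=4, stride=2)
    print(len(tokens), len(tokens[0]))  # 4 patches, each with 4 * 4 = 16 values
```

With `stride` equal to `patch_width`, this reduces to ordinary non-overlapping patching. A smaller stride yields more tokens per recording and therefore longer attention sequences. The frame-by-frame flattening order is an arbitrary choice here, since a learned linear embedding can absorb any fixed ordering.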