Weakly-supervised Speech-to-text Mapping with Visually Connected Non-parallel Speech-text Data using Cyclic Partially-aligned Transformer

(3 minutes introduction)

| Johanes Effendi (NAIST, Japan), Sakriani Sakti (NAIST, Japan), Satoshi Nakamura (NAIST, Japan) |
|---|

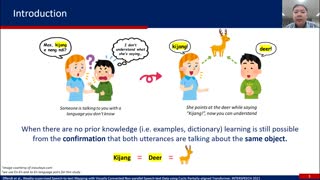

Although automatic speech recognition (ASR) systems have been successfully developed for several of the world’s major languages, they require a tremendous amount of parallel speech-text data. Unfortunately, such resources are usually unavailable for many other languages. This study addresses the speech-to-text mapping problem given only a collection of visually connected, non-parallel speech-text data. We call this “mapping” because the system attempts to learn the semantic association between speech and text rather than recognize speech with an exact word-by-word transcription. Here, we propose our novel cyclic partially-aligned Transformer, which uses a two-fold mechanism. First, we train a Transformer-based vector-quantized variational autoencoder (VQ-VAE) to produce a discrete speech representation in a self-supervised manner. Then, we use a Transformer-based sequence-to-sequence model inside a chain mechanism to map unknown, untranscribed speech utterances to semantically equivalent text. Because this is not strictly speech recognition, we focus on evaluating the semantic equivalence of the generated text hypotheses. Our evaluation shows that the proposed method is also effective on a multispeaker natural speech dataset and can be applied in a cross-lingual setting.
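The first stage relies on vector quantization, which turns continuous speech features into discrete units. The framework-free sketch below illustrates only that quantization step of a generic VQ-VAE. It assumes the encoder output frames and a fixed codebook are already given, and it omits the Transformer encoder and decoder, codebook learning, and the chain mechanism. The names `quantize` and `vq_loss`, the toy data, and `beta=0.25` (the commitment weight commonly used for VQ-VAE) are illustrative assumptions and are not taken from this work.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(
    frames: Sequence[Vector], codebook: Sequence[Vector]
) -> Tuple[List[int], List[List[float]]]:
    """Replace each continuous encoder frame with its nearest codebook entry.

    Returns the discrete code indices (the "speech units") and the
    quantized vectors that a VQ-VAE decoder would consume.
    """
    indices: List[int] = []
    quantized: List[List[float]] = []
    for frame in frames:
        best = min(range(len(codebook)), key=lambda k: squared_distance(frame, codebook[k]))
        indices.append(best)
        quantized.append(list(codebook[best]))
    return indices, quantized


def vq_loss(frames: Sequence[Vector], quantized: Sequence[Vector], beta: float = 0.25) -> float:
    """Forward value of the codebook loss plus beta times the commitment loss.

    Stop-gradient changes only the gradients, not the value, so both terms
    equal the mean per-frame squared distance; the total is (1 + beta) * mse.
    (Hypothetical helper for illustration; beta=0.25 is an assumed default.)
    """
    mse = sum(squared_distance(f, q) for f, q in zip(frames, quantized)) / len(frames)
    return (1.0 + beta) * mse


if __name__ == "__main__":
    # Toy 2-D "encoder outputs" and a 3-entry codebook (made-up data).
    codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 2.0]]
    frames = [[0.1, -0.1], [0.9, 1.2], [0.2, 1.8], [1.1, 0.9]]
    codes, q = quantize(frames, codebook)
    print(codes)  # [0, 1, 2, 1]
    print(vq_loss(frames, q))
```

In a full system, the resulting index sequence (here `[0, 1, 2, 1]`) would serve as the discrete speech representation passed on to the sequence-to-sequence mapping stage. The nearest-neighbour lookup is what makes the representation discrete, and the commitment term keeps encoder outputs close to their assigned codes.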