Selective Deep Speaker Embedding Enhancement for Speaker Verification

Jee-Weon Jung, Ju-Ho Kim, Hye-Jin Shim, Seung-bin Kim, Ha-Jin Yu

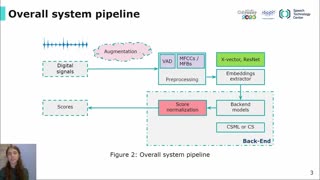

Utterances recorded at a distance are one of the major causes of performance degradation in speaker verification systems. In this study, we propose two frameworks for deep speaker embedding enhancement that specifically focus on distant utterances. Both frameworks take as input speaker embeddings extracted by front-end systems, including deep neural network (DNN)-based systems, which widens their range of applications. Here, we use speaker embeddings extracted by feeding raw waveforms directly into a DNN. The first proposed system, skip connection-based selective enhancement, adopts a skip connection that directly connects the input embedding to the output. The skip connection is multiplied by a value between 0 and 1, similar to a gate mechanism, and this value is determined concurrently by another small DNN. This allows enhancement to be applied selectively: when the input embedding is extracted from a close-talk utterance, the skip connection is expected to be more activated, whereas when the embedding comes from a distant utterance, the DNN is expected to be more activated. The second proposed system, a selective enhancement discriminative auto-encoder, aims to find a discriminative representation with an encoder-decoder architecture. Its hidden representation is divided into two subspaces: additional objective functions gather speaker information into one subspace, while the other subspace is left to contain subsidiary information (e.g., reverberation and noise). The effectiveness of both proposed frameworks is evaluated on the VOiCES from a Distance Challenge evaluation set, where they achieve relative error reductions of 11.03% and 15.97%, respectively, compared with a baseline that does not employ an explicit feature enhancement phase.
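The two frameworks described in the abstract can be illustrated with a minimal, untrained NumPy forward pass. This is a sketch under stated assumptions, not the authors' implementation. The following are all assumptions not given in the abstract: the layer sizes, the ReLU and tanh activations, the use of a single scalar sigmoid gate per embedding, the complementary weighting `g * x + (1 - g) * f(x)`, and the use of softmax cross-entropy as the additional speaker objective. The class and variable names (`GatedSkipEnhancer`, `SubspaceAutoEncoder`, `lam`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class GatedSkipEnhancer:
    """Hypothetical sketch of skip connection-based selective enhancement."""

    def __init__(self, dim, hidden, rng):
        # Enhancement DNN f(x): one hidden ReLU layer (architecture is assumed).
        self.W1 = rng.standard_normal((dim, hidden)) * 0.1
        self.W2 = rng.standard_normal((hidden, dim)) * 0.1
        # Small gate DNN producing one scalar in (0, 1) per embedding (assumed).
        self.Wg = rng.standard_normal((dim, 1)) * 0.1
        self.bg = np.zeros(1)

    def __call__(self, x):
        enhanced = np.maximum(x @ self.W1, 0.0) @ self.W2  # f(x)
        g = sigmoid(x @ self.Wg + self.bg)  # shape (batch, 1)
        # Assumed complementary weighting: g near 1 passes the input through
        # (close-talk), g near 0 relies on the enhancement DNN (distant).
        return g * x + (1.0 - g) * enhanced, g


class SubspaceAutoEncoder:
    """Hypothetical sketch of an auto-encoder with a split hidden space."""

    def __init__(self, dim, spk_dim, res_dim, n_speakers, rng):
        self.spk_dim = spk_dim
        self.We = rng.standard_normal((dim, spk_dim + res_dim)) * 0.1
        self.Wd = rng.standard_normal((spk_dim + res_dim, dim)) * 0.1
        # Speaker classifier that sees only the speaker subspace.
        self.Wc = rng.standard_normal((spk_dim, n_speakers)) * 0.1

    def encode(self, x):
        h = np.tanh(x @ self.We)
        # First spk_dim units: speaker subspace; the rest: subsidiary subspace.
        return h[:, : self.spk_dim], h[:, self.spk_dim :]

    def loss(self, x, labels, lam=1.0):
        h_spk, h_res = self.encode(x)
        # The decoder reconstructs the input from both subspaces.
        recon = np.concatenate([h_spk, h_res], axis=1) @ self.Wd
        recon_loss = np.mean((recon - x) ** 2)
        # Assumed extra objective: softmax cross-entropy on the speaker subspace.
        logits = h_spk @ self.Wc
        logits = logits - logits.max(axis=1, keepdims=True)
        log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
        ce = -np.mean(log_probs[np.arange(len(labels)), labels])
        return recon_loss + lam * ce, recon_loss, ce


# Usage with five hypothetical 8-dimensional embeddings from three speakers.
x = rng.standard_normal((5, 8))
labels = np.array([0, 1, 2, 0, 1])

enhancer = GatedSkipEnhancer(dim=8, hidden=16, rng=rng)
y, g = enhancer(x)

ae = SubspaceAutoEncoder(dim=8, spk_dim=4, res_dim=2, n_speakers=3, rng=rng)
total, recon, ce = ae.loss(x, labels)
```

In this sketch, pushing the gate bias `bg` to a large positive value drives `g` to 1, so the output collapses to the input embedding. This mirrors the intended close-talk behavior. After training, only the speaker subspace `h_spk` would be kept as the enhanced embedding. The abstract does not state whether the DNN branch is weighted by `1 - g` or added unweighted, so the complementary form here is one plausible choice.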