Utilizing VOiCES Dataset for Multichannel Speaker Verification with Beamforming

Ladislav Mošner, Oldřich Plchot, Johan Rohdin, Jan Černocký

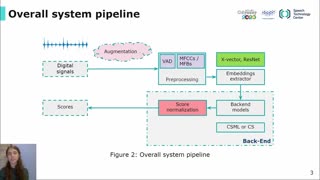

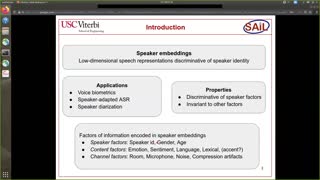

The VOiCES from a Distance Challenge 2019 aimed to evaluate speaker verification (SV) systems on single-channel trials based on the Voices Obscured in Complex Environmental Settings (VOiCES) corpus. Because the corpus comprises recordings of the same utterances captured simultaneously by multiple microphones in the same environments, it is also suitable for multichannel experiments. In this work, inspired by the VOiCES challenge, we design a multichannel dataset together with development and evaluation trials for SV. We also present alternative versions that discard harmful microphones. We assess the usefulness of the created dataset for x-vector-based SV with beamforming as a front end. Standard fixed beamforming and NN-supported beamforming trained on simulated data using ideal binary masks (IBMs) are compared with another variant of NN-supported beamforming trained solely on VOiCES data. Experiments with the VOiCES-trained beamformer revealed a lack of training data, which we tackled with a variant of SpecAugment applied to magnitude spectra. This approach yielded up to 10% relative improvement in equal error rate (EER), bringing the results closer to those obtained by a good IBM-based beamformer.
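The abstract does not state how the SpecAugment variant was configured. As an illustration only, the following NumPy sketch applies SpecAugment-style frequency and time masking to multichannel magnitude spectra. It assumes zero-filled masks, masks shared across all microphones, and no time warping (which the original SpecAugment also uses); the function name `spec_augment_magnitude` and all default mask counts and widths are hypothetical, not the authors' settings.

```python
import numpy as np


def spec_augment_magnitude(mag, num_freq_masks=2, max_freq_width=8,
                           num_time_masks=2, max_time_width=20, rng=None):
    """SpecAugment-style masking of a magnitude spectrogram (hypothetical helper).

    mag: array of shape (..., frames, freq_bins), e.g. (channels, frames, bins).
    Each mask zeroes a random contiguous band of frequency bins or frames.
    The same masks are shared by all leading dimensions (e.g. all microphones),
    which is an assumption. Mask counts and maximum widths are illustrative.
    """
    rng = np.random.default_rng() if rng is None else rng
    out = np.array(mag, dtype=float, copy=True)  # never modify the input
    n_frames, n_bins = out.shape[-2], out.shape[-1]

    # Frequency masking: zero a band of consecutive bins in every frame.
    for _ in range(num_freq_masks):
        width = min(int(rng.integers(0, max_freq_width + 1)), n_bins)
        start = int(rng.integers(0, n_bins - width + 1))
        out[..., start:start + width] = 0.0

    # Time masking: zero a span of consecutive frames in every bin.
    for _ in range(num_time_masks):
        width = min(int(rng.integers(0, max_time_width + 1)), n_frames)
        start = int(rng.integers(0, n_frames - width + 1))
        out[..., start:start + width, :] = 0.0

    return out


# Usage: 4 microphones, 200 STFT frames, 257 frequency bins of random magnitudes.
rng = np.random.default_rng(0)
mag = np.abs(rng.standard_normal((4, 200, 257)))
aug = spec_augment_magnitude(mag, rng=rng)
```

Sharing one set of masks across channels keeps the spatial relations between microphones intact within each unmasked time-frequency bin; masking each channel independently would be an equally plausible variant.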