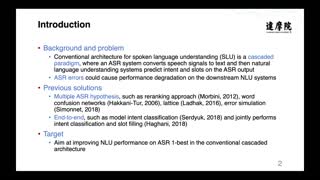

Integrating Dialog History into End-to-End Spoken Language Understanding Systems

(3 minutes introduction)

Jatin Ganhotra (IBM, USA), Samuel Thomas (IBM, USA), Hong-Kwang J. Kuo (IBM, USA), Sachindra Joshi (IBM, USA), George Saon (IBM, USA), Zoltán Tüske (IBM, USA), Brian Kingsbury (IBM, USA)

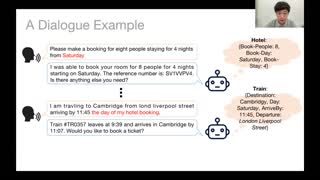

End-to-end spoken language understanding (SLU) systems that process human-human or human-computer interactions are often context-independent and process each turn of a conversation in isolation. Spoken conversations, on the other hand, are highly context-dependent, and dialog history contains useful information that can improve the processing of each conversational turn. In this paper, we investigate the importance of dialog history and how it can be effectively integrated into end-to-end SLU systems. While processing a spoken utterance, our proposed RNN transducer (RNN-T) based SLU model has access to its dialog history in the form of decoded transcripts and SLU labels of previous turns. We encode the dialog history as BERT embeddings and use them as an additional input to the SLU model, along with the speech features for the current utterance. We evaluate our approach on a recently released spoken dialog data set, the HARPERVALLEYBANK corpus. We observe significant improvements: 8% for dialog action and 30% for caller intent recognition tasks, in comparison to a competitive context-independent end-to-end baseline system.
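The following is a minimal sketch of the data flow described above, not the authors' implementation. It flattens previous turns (decoded transcripts plus SLU labels) into one history string, maps that string to a fixed-size vector, and appends the vector to every speech feature frame of the current utterance. Several parts are assumptions: the abstract does not say how the history embedding is combined with the speech features, so frame-wise concatenation is only one plausible choice; `toy_embedding` is a hash-based placeholder standing in for a BERT encoder; and the label strings and helper names are hypothetical.

```python
import hashlib
from typing import List, Sequence, Tuple

# One previous turn: (decoded transcript, SLU labels predicted for that turn).
Turn = Tuple[str, Sequence[str]]


def history_text(turns: Sequence[Turn], sep: str = " [SEP] ") -> str:
    """Flatten previous turns into one string: each transcript followed by its labels."""
    return sep.join(" ".join([transcript, *labels]) for transcript, labels in turns)


def toy_embedding(text: str, dim: int = 8) -> List[float]:
    """Placeholder for a BERT embedding (this is NOT BERT).

    Deterministically maps text to a fixed-size vector so the sketch runs
    without any model download. A real system would call a BERT encoder here.
    """
    if not 1 <= dim <= 32:  # SHA-256 yields 32 bytes
        raise ValueError("dim must be between 1 and 32")
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [digest[i] / 255.0 for i in range(dim)]


def append_history(
    frames: Sequence[Sequence[float]], history_vec: Sequence[float]
) -> List[List[float]]:
    """Concatenate the same history vector onto every acoustic frame (assumed fusion)."""
    return [list(frame) + list(history_vec) for frame in frames]


# Hypothetical previous turn with made-up label names.
previous_turns = [("hello i need to check my balance", ["INTENT_A", "ACTION_B"])]

# Two frames of 3-dimensional speech features for the current utterance.
frames = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]

augmented = append_history(frames, toy_embedding(history_text(previous_turns)))
```

Each augmented frame keeps its original speech features and gains the same history vector, so a context-independent model can be given context by widening its input layer only. Other fusion points, such as conditioning a later layer on the history vector, would fit the description in the abstract equally well.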